Table of Contents

- 1. Overview
- 2. Architecture
- 3. The OpenLaszlo Video Client Model
- 4. Establishing a connection to an RTMP server
- 5. Cameras and Microphones
- 6. Streaming Files over HTTP
- 7. Bidirectional interaction over Real Time Messaging Protocol (RTMP)
- 8. Installing Video Cameras and Servers
- 9. Comparison of Views and Videoviews for Rendering Audio and Video

This chapter discusses streaming audio and video in .flv and .mp3 formats that are rendered in the specialized <videoview>. More limited audio and visual capabilities can be obtained by attaching audio and visual sources as resources to regular <view>s. For discussion of that topic, see Chapter 18, Media Resources. The video APIs described in this chapter work only in OpenLaszlo applications that are compiled for the Flash runtime target.

OpenLaszlo video APIs give you access to the functionality of media servers such as the Flash Media Server and the Red5 media server. When your OpenLaszlo program is connected to a media server over a Real Time Messaging Protocol (RTMP) connection, you can not only receive and play audio and video (in MP3 and FLV formats), but also record your own audio and video locally and send it to the server, where it can be stored or shared in real time with other client programs.

In contrast to RTMP, files that are streamed over an HTTP connection allow more limited functionality.

This chapter explains the concepts of controlling streaming media over an HTTP connection and of bidirectional communication with a media server over an RTMP connection.


SWF only: The features described in this section only work in applications compiled to SWF. They do not work in applications compiled to other runtimes.

There are two main ways that OpenLaszlo applications can interact with video media: as an essentially passive recipient of video streamed over HTTP, or, when a media server is present, as a full participant that captures video and audio with local cameras and microphones and sends it back to the media server over RTMP.

Media servers do not only stream media content to the Flash plugin; they can also send the client instructions to execute, as well as other kinds of data. Servers can receive video, audio, and data from an OpenLaszlo application and either save it or rebroadcast it. These APIs also allow you to manipulate the source video content on the client, for example to rotate it, change its transparency, or seek forward and back.

This functionality makes possible entirely new types of web applications. For example:

- Video on demand
- Video mail
- Multi-user video chat
- Video sharing and publishing
- Conferencing
- Broadcasting live streams of concerts
- Recording audio

OpenLaszlo APIs implement an abstraction layer so that you can use the same classes to manipulate video data regardless of its source. For example, you use a <videoview> to contain video data regardless of the protocol (HTTP or RTMP) over which the data arrives. The <mediastream> associated with that <videoview> determines where and how the data is obtained.
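As a minimal sketch of this abstraction, the two videoviews below differ only in their url and type attributes, and the same stop() call works on the stream of either one. The mysite.com addresses follow the placeholders used elsewhere in this chapter; the RTMP stream name myvideo is hypothetical, and the sketch assumes autoplay behaves the same way for both types.

```xml
<canvas>
  <simplelayout spacing="10"/>
  <!-- needed only by the RTMP videoview; placeholder server address -->
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <!-- file downloaded over HTTP -->
  <videoview id="httpvid" url="http://mysite.com/myvideo.flv" type="http" autoplay="true"/>
  <!-- hypothetical stream "myvideo" served over RTMP -->
  <videoview id="rtmpvid" url="myvideo" type="rtmp" autoplay="true"/>
  <!-- the same stream method works regardless of the protocol -->
  <button text="stop both" onclick="httpvid.stream.stop(); rtmpvid.stream.stop()"/>
</canvas>
```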

Depending on whether your application is simply a receiver of streams or both a sender and a receiver, its architecture may be simple or complex. In the simplest case, the LZX application merely receives and displays streamed files, and your programming options are limited. In the more complex case, for example a multi-point video chat, your application may be considered to have a central server component and any number of clients, which communicate with each other through the server. In such applications you need to handle tasks such as receiving and displaying streamed data, recording and broadcasting from local microphones and cameras, seeking forward and back in the stream, and so forth. We'll examine some of these cases in examples below.

LZX videoviews can stream content in FLV or MP3 format. According to Wikipedia:

FLV (Flash Video) is a proprietary file format used to deliver video over the Internet using Adobe Flash Player (also called Macromedia Flash Player) version 6, 7, 8, or 9. FLV content may also be embedded within SWF files. Notable users of the FLV format include Google Video, Reuters.com, YouTube and MySpace. Flash Video is viewable on most operating systems, via the widely available Macromedia Flash Player and web browser plug-in, or one of several third-party programs such as Media Player Classic (with the ffdshow codec installed), MPlayer, or VLC media player.

The ubiquitous MP3 format is described as follows:

MPEG-1 Audio Layer 3, more commonly referred to as MP3, is a popular digital audio encoding and lossy compression format, designed to greatly reduce the amount of data required to represent audio, yet still sound like a faithful reproduction of the original uncompressed audio to most listeners. It was invented by a team of German engineers who worked in the framework of the EUREKA 147 DAB digital radio research program, and it became an ISO/IEC standard in 1991.

Video content can be communicated from the server using either of two protocols:

- HTTP
- Real Time Messaging Protocol (RTMP)

Depending on where the media is served from and which protocol connects the OpenLaszlo client to the server, different capabilities are available in the client application.

HTTP, the HyperText Transfer Protocol, is useful for downloading files to a client. However, it's not interactive and has no special provisions for handling video data. When you load a URL that identifies an .flv or .mp3 file, that file is downloaded. You have some control over when to start playing the download, but that's about it. From the content provider's point of view, the most obvious advantage of HTTP is that it requires no special media server. HTTP is also useful when bidirectional communication is not needed, because videos downloaded over this protocol start quickly.

When you download a file over HTTP, the entire file is loaded into memory. Once it is in memory, you can seek within it quickly. But because the files must fit into memory, there is a limit on the size of files that you can handle this way.

RTMP, the Real Time Messaging Protocol, was developed by Adobe (formerly Macromedia) to get around the limitations of HTTP when dealing with bidirectional ("full duplex") video data in real time. RTMP provides APIs for complex interactions, and because the connection allows you to download portions of the file as needed, you can handle larger files than you can over HTTP, which downloads the entire file. RTMP also uses less memory, because only the portion of the stream currently needed is held in memory rather than the whole file.

RTMP has been optimized for video and has better compression rates. To use the RTMP protocol, you must establish a connection to an application on a server. You do this using the <rtmpconnection> tag.
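A minimal connection might look like the following sketch. It assumes a server application reachable at the placeholder address rtmp://mysite.com/myapp/ used elsewhere in this chapter; the <rtmpstatus> indicator, described later in this chapter, turns green once the connection succeeds.

```xml
<canvas>
  <simplelayout spacing="5"/>
  <!-- replace host and application name with your own server's values -->
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <!-- red while disconnected, green once the connection works -->
  <rtmpstatus/>
</canvas>
```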

For more information on the RTMP protocol, see the Adobe and OSFlash websites.

Each of these protocols has its uses. The RTMP protocol, coupled with a media server, provides a much richer environment for creating interactive media applications. On the other hand, HTTP requires no special media server software, and for many simple streaming applications it provides faster startup.

OpenLaszlo capabilities on the client are provided through a group of base classes and through two components that are built on top of these base classes.

The <videoview> is the visual object that is used to display audio and visual data. The <mediastream> associated with a <videoview> tells it where and how to get its content. You can attach devices to the <videoview>; as of OpenLaszlo 3.4, the two supported devices are <camera> and <microphone>. The classes <camera> and <microphone> are implemented as extensions of the base class <mediadevice>.

The <videoview> is a subclass of <view> that is optimized for audio/visual streaming. It is a visual object whose height, width, placement, and so on you can control just as you would for any other view. In addition to the attributes inherited from <view>, this class has attributes that allow you, for example, to identify a camera and microphone associated with it, to control whether media starts playing immediately, to determine play rates, and so forth.
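Because a <videoview> is a <view>, ordinary view attributes apply to it. The sketch below assumes that the opacity and rotation attributes inherited from <view> behave on a videoview as they do on any other view; the video URL is a placeholder.

```xml
<canvas>
  <simplelayout spacing="10"/>
  <!-- size, opacity and rotation are ordinary <view> attributes -->
  <videoview id="v" width="320" height="240"
             opacity="0.5" rotation="10"
             url="http://mysite.com/myvideo.flv" autoplay="true"/>
  <!-- turn the video a further 90 degrees on each click -->
  <button text="rotate" onclick="v.setAttribute('rotation', v.rotation + 90)"/>
</canvas>
```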

Notice that unlike <view>, you cannot attach a resource to a <videoview>.

<videoview> has methods parallel to those on <resource>. For example, you use play() and stop() to control video playback.

When you create a <videoview> and pass it a URL and a type (http or rtmp), a stream is created for it by default. If you want more control over the stream, you can define a <mediastream> explicitly.

The <mediastream> tag allows you to identify the type of stream to be associated with a given <videoview>. Using attributes of and methods on the <mediastream>, you can, for example, determine the frame rate and current time of the stream, set it to record or broadcast, and so forth.
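The following sketch declares a <mediastream> explicitly and binds it to a <videoview> through the stream attribute, following the pattern of the muting example later in this chapter. The stream name mystream and the server address are placeholders, and the time readout assumes the stream's time attribute can drive a constraint, as it does in the progress-bar example in this chapter.

```xml
<canvas>
  <simplelayout spacing="5"/>
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <!-- explicitly declared stream; "mystream" is a hypothetical name -->
  <mediastream name="s1" type="rtmp" url="mystream"/>
  <videoview id="v" type="rtmp" stream="$once{canvas.s1}">
    <camera show="true"/>
  </videoview>
  <!-- current time of the stream, kept up to date by a constraint -->
  <text width="200" text="${'time: ' + canvas.s1.time}"/>
  <button text="broadcast" onclick="canvas.s1.broadcast()"/>
  <button text="stop" onclick="canvas.s1.stop()"/>
</canvas>
```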

OpenLaszlo provides two upper level components for managing connections and video objects.

The <rtmpstatus> component provides a visual indication of the status of the RTMP connection.

This element displays a small indicator "light" that shows the status of the connection:

- red: no connection
- green: working connection

The example below shows this component.

Example 42.1. Trivial rtmpstatus example

```xml
<canvas height="40" width="100%">
  <simplelayout spacing="5"/>
  <text text="The indicator light is red because there is no rtmp connection."/>
  <rtmpstatus/>
</canvas>
<!-- * X_LZ_COPYRIGHT_BEGIN ***************************************************
* Copyright 2007, 2008 Laszlo Systems, Inc. All Rights Reserved. *
* Use is subject to license terms. *
* X_LZ_COPYRIGHT_END ****************************************************** -->
```

An <rtmpconnection> represents a connection to an application running on an RTMP server, such as the Flash Media Server or Red5, enabling two-way streaming of audio and video. This allows you to broadcast and receive live audio and/or video, as well as record video from a webcam or audio from a microphone to files on the server. Recorded files may be played back over HTTP (using the <mediastream> and <videoview> classes) or over RTMP, which allows seeking within and playback of long files that are impractical to load into memory.
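For example, recording from a webcam and then playing the recording back over RTMP could be sketched as follows. The stream name greeting is hypothetical, and the sketch assumes the server stores the recording under the stream's name, so that a second videoview with the same url can play it back.

```xml
<canvas>
  <simplelayout spacing="5"/>
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <!-- camera and microphone feed the hypothetical stream "greeting" -->
  <videoview id="rec" url="greeting" type="rtmp">
    <camera show="true"/>
    <microphone capturing="true"/>
  </videoview>
  <button text="record" onclick="rec.stream.record()"/>
  <button text="stop recording" onclick="rec.stream.stop()"/>
  <!-- a second view that plays the recorded file back from the server -->
  <videoview id="pb" url="greeting" type="rtmp"/>
  <button text="play back" onclick="pb.stream.play()"/>
</canvas>
```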

OpenLaszlo implements the <camera> and <microphone> objects as subclasses of the <mediadevice> base class. Most of the methods and attributes that you use to control cameras and microphones are inherited from the base class. To protect the privacy of computer users, camera and microphone objects must explicitly obtain permission from the user before they can be turned on (this ensures that people are not being spied upon without their knowledge).

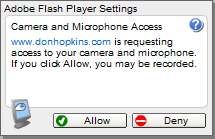

When your program instantiates a camera or microphone object, the Flash Player causes a dialogue to be appear on the screen.

If the person using the application indicates that permission has been granted, the allowed attribute for every device is set to true>

Note that it is not possible to allow permission on one device and not another. It's an all-or-nothing proposition. You grant access to all cameras and microphones, or to none.

Here's an illustration of a representative security dialogue the first time the camera is requested:

You can change the permissions of a running application by using the right-click context menu on the video. Note that the menu may show the names of device drivers, not of the actual devices. A typical right-click menu is shown below.
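A sketch of reacting to the user's answer: the text below is constrained to the camera's allowed attribute, which, as described above, is set to true once permission is granted. The message wording is illustrative, and the sketch assumes allowed stays false (or unset) until the user grants access.

```xml
<canvas>
  <simplelayout spacing="5"/>
  <videoview id="v">
    <!-- instantiating the camera triggers the Flash Player privacy dialogue -->
    <camera id="cam" show="true"/>
  </videoview>
  <!-- re-evaluated when the user answers the dialogue -->
  <text width="300"
        text="${cam.allowed ? 'Camera access granted' : 'Camera access not (yet) granted'}"/>
</canvas>
```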

Once you have attached a microphone and/or camera to a videoview and received permission from the user to turn them on, the application turns them on by setting capturing to true.
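Because capturing is an ordinary attribute, it can also be toggled at run time with setAttribute(). In this sketch the devices are given the hypothetical names cam and mic so that the checkboxes can reach them as v.cam and v.mic, the same way Example 42.4 refers to v.mic.

```xml
<canvas>
  <simplelayout spacing="5"/>
  <videoview id="v">
    <camera name="cam" show="true" capturing="false"/>
    <microphone name="mic" capturing="false"/>
  </videoview>
  <!-- value is the checkbox's new state (true or false) -->
  <checkbox onvalue="v.cam.setAttribute('capturing', value)">Camera on</checkbox>
  <checkbox onvalue="v.mic.setAttribute('capturing', value)">Microphone on</checkbox>
</canvas>
```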

Example 42.3. Turning microphone and camera on

```xml
<canvas>
  <simplelayout spacing="5"/>
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <videoview id="v" url="test.flv" type="rtmp">
    <camera show="true"/>
    <microphone capturing="true"/>
  </videoview>
  <!-- a progress indicator bar proportional to stream time
       (180 is the assumed maximum recording length in seconds) -->
  <view bgcolor="black" height="5" width="${v.stream.time/180*v.width}"/>
  <!-- methods on the stream automatically created by the videoview -->
  <button text="record" onclick="v.stream.record()"/>
  <button text="stop" onclick="v.stream.stop()"/>
</canvas>
```

You can have more than one camera available to a videoview. The following example shows how to use the index attribute of the camera object to control which camera is in use:

Example 42.4. Selecting which camera to use

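```xml
<canvas>
  <simplelayout spacing="5"/>
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <videoview x="10" id="v" url="test.flv" type="rtmp">
    <!-- index selects which of the installed cameras to use -->
    <camera show="true" index="2"/>
    <microphone name="mic" capturing="false"/>
  </videoview>
  <!-- a level meter driven by the microphone's input level -->
  <view bgcolor="green" width="10" height="${v.mic.level/100*v.height}"/>
</canvas>
```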

To show a video from an HTTP server and play it automatically:

Example 42.5. Video Display over HTTP

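```xml
<canvas>
  <simplelayout spacing="5"/>
  <!-- absolute URL -->
  <videoview url="http://mysite.com/myvideo.flv" autoplay="true"/>
  <!-- relative URL -->
  <videoview url="myvideo.flv" autoplay="true"/>
</canvas>
```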

The Real Time Messaging Protocol (RTMP) is designed to handle high-speed communication of audio and video information between a client application and a media server efficiently.

Here are some general guidelines for setting up video cameras and servers for OpenLaszlo applications. You should of course consult the documentation for the individual servers too.

Install the Flash Media Server in:

C:\Program Files\Macromedia\Flash Media Server 2\

Create the test application directory and subdirectories:

C:\Program Files\Macromedia\Flash Media Server 2\applications\test

C:\Program Files\Macromedia\Flash Media Server 2\applications\test\streams

C:\Program Files\Macromedia\Flash Media Server 2\applications\test\streams\instance1

Copy the flash video test files into the test\streams\instance1 directory, from:

$LZ_HOME/test/video/videos/*.flv

If the media server fails to start on Windows, make sure that Emacs or another text editor did not change the ownership and permissions of the configuration files. For example, you may have to change the permissions on some of the Flash Media Server XML configuration files: if the Flash server runs as another user and cannot read them, it will not start.

If the media server fails to work on Linux, make sure that you have the shared libraries from Firefox installed in /usr/lib. If the libraries are missing, the server will run and appear to be working, and even the admin interface will work, but none of the streaming video works. When you run the Flash server startup command ("./server start"), it will complain about missing libraries. If this happens, download Firefox and copy all its shared libraries to /usr/lib.

http://livedocs.macromedia.com/fms/2/docs/wwhelp/wwhimpl/common/html/wwhelp.htm?context=LiveDocs_Parts&file=00000009.html

OpenLaszlo applications can communicate with the Red5 media server, an open source Flash media server that uses RTMP. For more on Red5, see their website.

The Logitech QuickCam "Logitech Process Monitor" server (LvPrcSrv.exe), the one that substitutes computer-generated characters that track your motion and facial expressions for the video stream, interferes with Cygwin: it causes Cygwin to fail when forking new processes. This manifests itself as build processes failing mysteriously and Emacs having problems forking subprocesses in shell windows. You have to disable the server to make Cygwin work again.

http://blog.gmane.org/gmane.os.cygwin.talk

http://www.cygwin.com/ml/cygwin/2006-06/msg00641.html

You control playback of the video stream by using the play() and stop() methods on the stream.

Example 42.6. Adding playback controls on a stream

```xml
<canvas>
  <simplelayout spacing="5"/>
  <rtmpconnection src="rtmp://mysite.com/myapp/" autoconnect="true"/>
  <videoview id="v" url="http://mysite.com/myvideo.flv"/>
  <!-- play() and stop() act on the stream created by the videoview -->
  <button text="play" onclick="v.stream.play()"/>
  <button text="stop" onclick="v.stream.stop()"/>
</canvas>
```

In the example below, an application communicates through the media server application at rtmp://127.0.0.1/test over the RTMP protocol. One videoview broadcasts the local camera under the stream name "me"; the other plays the stream named "you".

Example 42.7. A simple multi-party video application

```xml
<canvas>
  <rtmpconnection src="rtmp://127.0.0.1/test" autoconnect="true"/>
  <simplelayout/>
  <rtmpstatus/>
  <view layout="axis:x; inset:10; spacing:10">
    <!-- broadcasts the local camera as the stream "me" -->
    <videoview id="live" url="me" type="rtmp" oninit="this.stream.broadcast()">
      <camera show="true"/>
    </videoview>
    <!-- plays the stream "you" -->
    <videoview id="vp" url="you" type="rtmp" oninit="this.stream.play()"/>
  </view>
</canvas>
```
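Because each client plays the stream that the other broadcasts, a second participant needs the same application with the two stream names swapped. A minimal sketch of that counterpart, assuming it connects to the same server application at rtmp://127.0.0.1/test:

```xml
<canvas>
  <rtmpconnection src="rtmp://127.0.0.1/test" autoconnect="true"/>
  <simplelayout/>
  <rtmpstatus/>
  <view layout="axis:x; inset:10; spacing:10">
    <!-- publishes "you", the stream the first client plays -->
    <videoview id="live" url="you" type="rtmp" oninit="this.stream.broadcast()">
      <camera show="true"/>
    </videoview>
    <!-- plays "me", the stream the first client broadcasts -->
    <videoview id="vp" url="me" type="rtmp" oninit="this.stream.play()"/>
  </view>
</canvas>
```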

Example 42.8. Controlling muting, broadcasting, and receiving

```xml
<canvas debug="true">
  <rtmpconnection src="rtmp://127.0.0.1/test" autoconnect="true"/>
  <!-- s1 carries the outgoing (broadcast) stream, s2 the incoming one -->
  <mediastream name="s1" type="rtmp"/>
  <mediastream name="s2" type="rtmp"/>
  <simplelayout/>
  <text multiline="true" width="100%">
    Instructions:<br/>
    1. Either run a flash media server on localhost (127.0.0.1), or ssh tunnel to a media server at a known host<br/>
    2. Press the broadcast button. (Grant camera access permission if needed.)
    The button should change to say "stop broadcasting"<br/>
    3. Press the receive button. You should be receiving audio and video from yourself and the
    button should say "stop receiving."<br/>
    4. Try out the audio and video mute buttons. The video mute should freeze the received picture.
    The audio mute should silence the received sound.<br/>
    5. Press the receive button. The received video should freeze and the button should say "receive".<br/>
    6. Press the receive button again. The video should resume and the button should say "stop receiving".<br/>
    7. Press the broadcast button. The received video should freeze and the button should say "broadcast".<br/>
    <br/>
    The indicator below shows the status of the video connection.
  </text>
  <rtmpstatus/>
  <view layout="axis:x; inset:10; spacing:10">
    <view id="v1" layout="axis:y; spacing:4">
      <videoview id="live" type="rtmp" stream="$once{canvas.s1}">
        <camera id="cam" show="false"/>
        <microphone id="mic" capturing="false"/>
      </videoview>
      <edittext name="username">YourName</edittext>
      <button>
        <!-- label follows the broadcasting state of s1 -->
        <attribute name="text"
                   value="${(s1.broadcasting == false) ? 'broadcast' : 'stop broadcasting'}"/>
        <handler name="onclick"><![CDATA[
          if (cam.show == false) {
            // publish under the name typed into the edittext
            live.stream.setAttribute('url', parent.username.text);
            live.stream.broadcast();
            cam.setAttribute('show', true);
          } else {
            live.stream.stop();
            cam.setAttribute('show', false);
          }
        ]]></handler>
      </button>
      <checkbox onvalue="s1.setAttribute('muteaudio', value)">Mute Audio</checkbox>
      <checkbox onvalue="s1.setAttribute('mutevideo', value)">Mute Video</checkbox>
    </view>
    <view id="v2" layout="axis:y; spacing:4">
      <videoview name="vid" type="rtmp" stream="$once{canvas.s2}"/>
      <edittext name="username">YourName</edittext>
      <!-- plays the stream whose name is typed into the edittext -->
      <button text="${s2.playing ? 'stop receiving' : 'receive'}"
              onclick="s2.setAttribute('url', parent.username.text);
                       if (s2.playing) s2.stop(); else s2.play();"/>
    </view>
  </view>
</canvas>
```

Audio and video files that are attached as resources to regular <view>s are not described in this chapter. However, here is a brief discussion of how they differ in terms of designing applications.

If you attach a resource to a view, it's compiled into the SWF, making the initial SWF larger, but once the SWF is fully loaded, the resource is available to play instantaneously when needed.

If you stream the MP3 instead, it will usually be easier on memory, but timing will be less reliable, because the player has to buffer the downloaded file. For example, consider how you might build a video editor. Suppose you had two video clips on a server and wanted to overlay two videoviews on top of one another so that you could create a transition from one clip to the other. You could monitor the first video so that you know when to start the second, but the appearance of the transition would be unpredictable. If you told the second video to play while fading from one videoview to the other, the delay before it actually started would depend on the buffer size and the bandwidth rather than on a fixed time, so you would not be able to pre-load and pause it in order to control the precise moment at which it starts playing.

In short, use the first approach (files transcoded to .swf and attached as resources to a <view>) for mouse clicks and other places where precise timing is important, and use the second approach (streaming to a <videoview>) for an MP3 or video player, where file size and memory efficiency become important.
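The two approaches might be contrasted as in the sketch below. The file names click.mp3 and song.mp3 are hypothetical, and the sketch assumes that an audio resource attached to a <view> can be started with the view's play() method; see Chapter 18, Media Resources, for the details of resource playback.

```xml
<canvas>
  <simplelayout spacing="5"/>
  <!-- embedded: compiled into the SWF, ready to play instantly -->
  <resource name="clicksound" src="click.mp3"/>
  <view name="clicker" resource="clicksound"/>
  <button text="click sound (embedded)" onclick="canvas.clicker.play()"/>
  <!-- streamed: smaller SWF, but start-up time depends on buffering -->
  <videoview id="player" url="http://mysite.com/song.mp3" type="http"/>
  <button text="song (streamed)" onclick="player.stream.play()"/>
</canvas>
```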